Can AI Turn Slides into Podcasts? My NotebookLM Experiment

Discover how I used NotebookLM to transform my Geo for Good 2024 slides into an audio podcast. Learn about grounded AI assistants, how to set up your own and what they have to offer. Tune In!!

Let's talk about organization—or the lack of it! 😅 We’ve all been there: rummaging through endless post-it notes 🗒️ and towering stacks of books 📚, desperately hunting for that one crucial detail. Oh, and those browser tabs! You open dozens, confidently telling yourself, “I’ll get back to these... eventually.” Sound familiar? Sure, note-taking has come a long way. We’ve got virtual notes, auto-sync, spellcheck, reminders—everything you can imagine! 💻✨ But even with all these shiny tools, it sometimes feels like we’re still chasing our tails, trying to remember that elusive file name from months ago and failing.

Launched last year as Project Tailwind, NotebookLM is an experimental offering from Google Labs that aims to redefine note-taking in the age of artificial intelligence (AI). It's designed to be your personal AI research assistant, capable of summarizing facts, explaining difficult concepts, and brainstorming new connections. The key differentiator here is NotebookLM's capacity for "source-grounding," meaning you can directly link it to your own source materials, ranging from Google Docs, Slides, PDFs, and Markdown files to text you copied from a source.

This grounding process essentially creates a personalized search space that deeply understands the information you're working with. I like to call it “RAG on the Fly,” and this blog post is about how you can set up your own notebook.

But why am I bringing this up now? Well, just last week, NotebookLM rolled out a brand-new feature: audio overviews of your sources! 🎧 So naturally, I thought, why not create audio overviews for some of the talks I gave at the Geo for Good Summit in São Paulo, Brazil? And now, thanks to NotebookLM, I’ve turned those into a podcast!

🎧Find it on Spotify here

Introducing RAGs

So what is RAG? A RAG (Retrieval-Augmented Generation) system in the context of large language models (LLMs) combines two powerful tools: a retrieval model and a generation model. The retrieval model searches for relevant information from external sources or databases (or the files you supply), and the generation model (like Gemini Pro) uses this information to craft a coherent and contextually appropriate response.

So what does this mean in the real world? The answers you get from a large language model are grounded in, and augmented by, the data sources you provide or make available to it, and the model's output can point back to those sources. An early example was Perplexity, which let the model cite the sources behind its search results.
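To make the retrieve-then-generate idea concrete, here is a minimal toy sketch in Python. It is not how NotebookLM or Gemini work internally: the file names, the `SOURCES` dictionary, and the word-overlap scoring are all assumptions made up for illustration, and the "generation" step is reduced to building a prompt that a real LLM would receive.

```python
import re
from collections import Counter

# Hypothetical sources standing in for uploaded documents (made up for this sketch).
SOURCES = {
    "slides.txt": "Earth Engine exports can be scheduled as batch tasks.",
    "book.txt": "Cloud masking removes cloudy pixels before compositing images.",
    "notes.txt": "Podcast cover art was generated separately.",
}


def tokenize(text):
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(question, sources, top_k=1):
    """Toy retriever: rank sources by how many question words they contain."""
    q_tokens = set(tokenize(question))
    scored = []
    for name, text in sources.items():
        counts = Counter(tokenize(text))
        score = sum(counts[t] for t in q_tokens)
        if score > 0:
            scored.append((score, name))
    # Highest score first; ties are broken alphabetically by source name.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_k]]


def build_prompt(question, sources, top_k=1):
    """Assemble a grounded prompt with numbered sources the answer can cite."""
    cited = retrieve(question, sources, top_k)
    context = "\n".join(
        f"[{i + 1}] ({name}) {sources[name]}" for i, name in enumerate(cited)
    )
    prompt = (
        "Answer using only the numbered sources.\n"
        f"{context}\nQuestion: {question}"
    )
    return prompt, cited
```

Calling `build_prompt("How does cloud masking work?", SOURCES)` returns the prompt plus the list of source names it was grounded on, which is the piece a citation pointer would link back to. Real systems typically swap the word-overlap scoring for embedding-based search, but the overall shape stays the same.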

Currently, a lot of LLM offerings, such as Anthropic, ChatGPT premium, and even Google AI Studio, have a RAG-like implementation where you can upload a file or PDF. But they are limited by a lack of citations, no concept of continuously querying the same sources, and so on.

Well, now you know why I call this creating your own RAG on the fly, while still using the Gemini API in the backend. This update also means NotebookLM is now available in all regions of the world where the Gemini API is available.

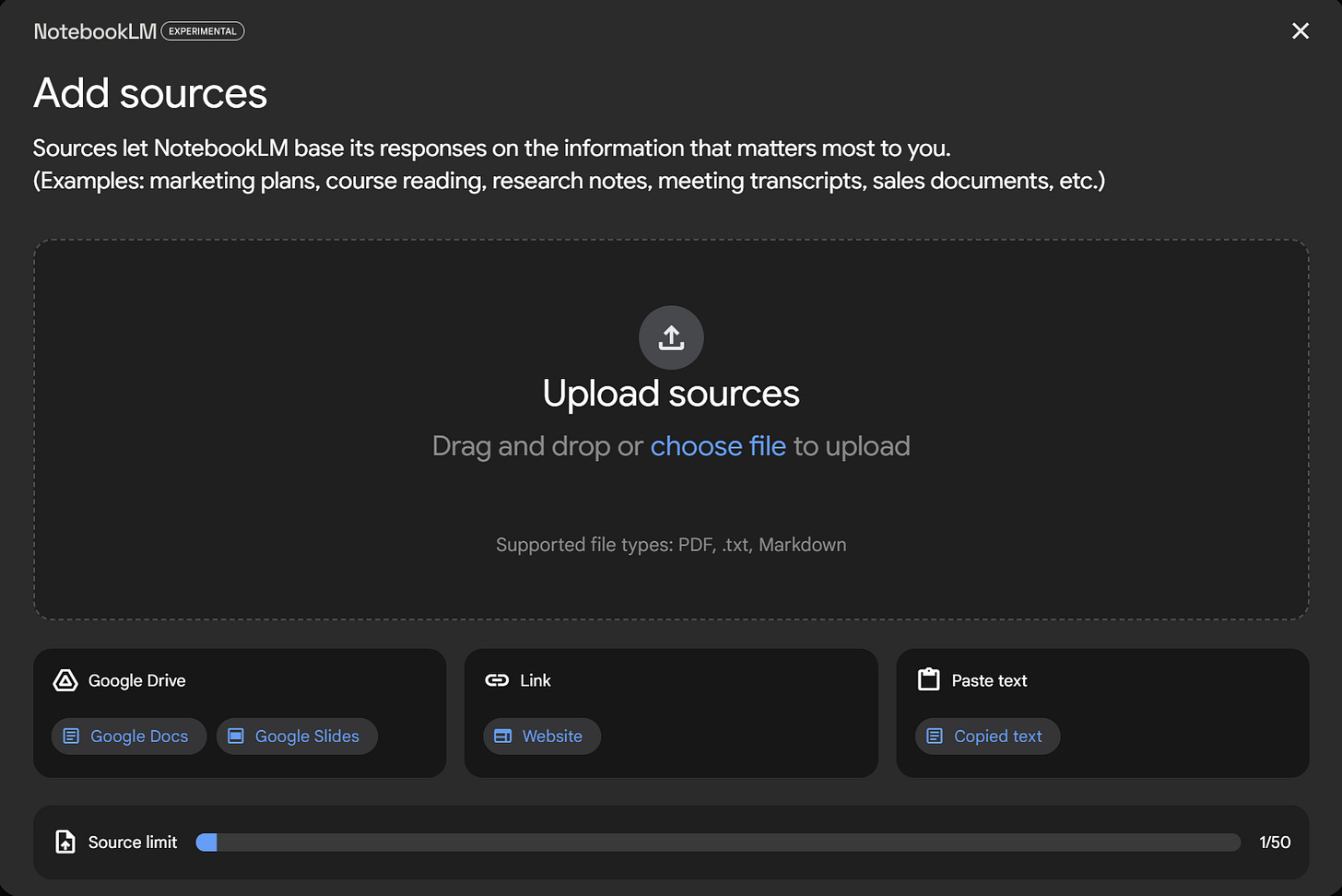

Building a Simple Notebook

First things first: head over to notebooklm.google.com. You can build a notebook with up to 50 documents, each with about 500,000 words. In pure numbers, that comes out to a generous 25 million words total. The context window for this experiment is large, and that is by design, to allow users to bring in different types and flavors of sources, start taking notes, and do so much more.
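The arithmetic behind that total is simple enough to check in a few lines of Python. The two limits come from the paragraph above; the words-per-page figure is purely my assumption for a rough estimate, not something NotebookLM reports.

```python
# Limits quoted in the post.
MAX_SOURCES = 50
MAX_WORDS_PER_SOURCE = 500_000

# Assumption for a rough estimate only: a dense technical page holds ~400 words.
ASSUMED_WORDS_PER_PAGE = 400


def notebook_capacity(max_sources=MAX_SOURCES, max_words=MAX_WORDS_PER_SOURCE):
    """Total words a single notebook can hold across all of its sources."""
    return max_sources * max_words


def estimated_words(pages, words_per_page=ASSUMED_WORDS_PER_PAGE):
    """Rough word count for a document, given its page count."""
    return pages * words_per_page


def fits_in_one_source(pages, words_per_page=ASSUMED_WORDS_PER_PAGE):
    """True if the estimated word count stays within the per-source limit."""
    return estimated_words(pages, words_per_page) <= MAX_WORDS_PER_SOURCE
```

Under that 400-words-per-page assumption, a 1,210-page book lands at roughly 484,000 words, so it would squeeze into a single source. A denser book could tip over the limit and need splitting.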

For my first experiment, I chose one of the Google Earth Engine community favorites: Cloud-Based Remote Sensing with Google Earth Engine. 🛰️ With its 1,210 pages, it’s a robust test case. Once I uploaded the book, NotebookLM summarized it, offered questions I could ask, and cited the exact sections as it retrieved information. You can download it here if you want to try it on your own.

The first thing you will notice once you have uploaded your documents is that the notebook guide button is available. It automatically summarizes the document and starts offering some questions you can ask.

I can now ask it questions that are specific to that source. When it retrieves information and augments the answer with it, I can see the citations or source sections by hovering over the citation pointers. I can also save the answer as a note to come back to later. Remember, NotebookLM was designed as a note-taking tool, just way more advanced than anything you have probably used.

Did you notice me clicking the generate button for the audio overview at the beginning? That is what generates a conversational overview of the source or sources, and it is what I used to create the podcast episodes I share later. You can listen to the audio overview for the book right here.

Building the Geo for Good 2024 Overviews Podcast

I wanted to stretch this a bit further, so I experimented with things like giving it most of the community catalog, with some cleanup, so it can answer questions about it. What was most fun was simply pointing it to the Google Slides I used to remotely co-present in São Paulo, Brazil, and the ones I will use to present in person in Dublin next week.

Audio Overview

This was launched just last week, so it’s not perfect, and it can take a while to generate an overview.

Here’s how to do it yourself: add your slides as a source, then hit generate audio overview. You can also load all of your slides.

I was part of two presentations at the São Paulo Mini Summit, so I wanted to start the podcast with just those two episodes. Next, with a little bit of googling and some episode and podcast cover art courtesy of Gemini, I was able to create a podcast on Spotify containing the audio overview files. You can get started here.

Things to Keep in Mind

These RAG environments and audio overviews are not always accurate. While they are built to reference the sources provided, some of the assumptions from model training still creep into the final audio output. But it is truly a multimodal experience, and with the capability to interact with the audio overview during or after generation, this should only get better.

This experiment also helped me understand what it takes to build query-ready text: doing some cleanup, arranging context, and maintaining cohesive text and sources for the LLM to pull from and supply me with the right answers. The models are not perfect, but as far as building a source-ready notebook goes, this is nothing short of one of the best note-taking and query tools I have ever experienced, and so much more.
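For a flavor of what that kind of cleanup can look like, here is a small hypothetical Python sketch. It is not the script I used for the catalogs: the function names, the `(title, body)` input shape, and the `## ` section labels are all assumptions chosen for illustration. The idea is just to normalize whitespace and keep each entry under a clear label so answers can point back to the right section.

```python
import re


def clean_text(raw):
    """Collapse runs of whitespace within each line and drop empty lines."""
    lines = (re.sub(r"\s+", " ", line).strip() for line in raw.splitlines())
    return "\n".join(line for line in lines if line)


def to_source_document(entries):
    """Join (title, body) pairs into one text with a labeled section per entry."""
    sections = []
    for title, body in entries:
        # The "## " label is an arbitrary choice to keep sections easy to spot.
        sections.append(f"## {title.strip()}\n{clean_text(body)}")
    return "\n\n".join(sections)
```

For example, `to_source_document([("Dataset A", "Global  land cover.\n\n30 m resolution.")])` yields a single tidy section titled `## Dataset A` with the two sentences on consecutive lines, ready to paste in as copied text.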

I hope you build your own notebooks. I now have ones for the Main and the Community Catalogs, and I am improving the source material to get better responses.

Best part: as per the terms, Google does not use any of the data collected within NotebookLM to train new AI models.

Talk to me about the Awesome GEE Community Catalog and other data needs. Feel free to reach out on LinkedIn and GitHub to share your ideas, offer feedback, or dive into more ideas, experiments, and discussions.

Might be the future of literature reviews.